Digital marketing is often more art than science, and reasonable professionals can disagree when looking at the same set of data. So it should be no surprise that we do not agree with every “Best Practice” published by Google. I recently ran into one of these conflicts, and I thought I would share it.

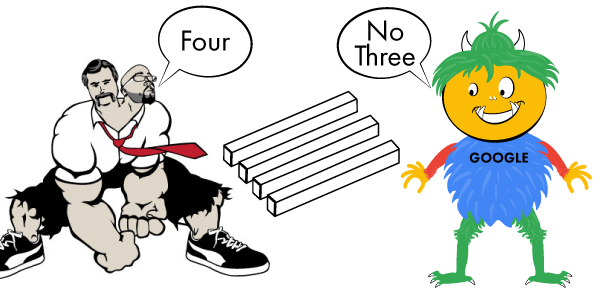

Google has a best practice of running 3 or more ads in each ad group, which they claim improves CTR by 5%. A second best practice says that ad groups should be set to optimize for clicks, and the claim here is also a 5% improvement in CTR. I accept that this is what they believe, and there is no attempt to deceive anyone. I would propose, however, that both of these are the same 5%, because you need both settings to get the effect. I do not question their data, only their conclusion. As you might have guessed, I do not agree with this “Best Practice,” and here is why.

CTR is a measure of performance that is important to Google because that is how they make their money. Google generates impressions but sells clicks, and CTR expresses how effectively those impressions turn into clicks. The challenge is that, for my clients, CTR is not the key metric. Their metric is always some form of conversion where business value is created for them. CTR is a measurement on the way to value creation, but it is not the creation itself. This fundamental difference is part of why we look at the data from a different perspective.

Let’s look at the multiple ad copy recommendation first. Google gets the result it wants with multiple ads because it gets to pick the rotation method, and as they say in Vegas, the house always wins. The odds of finding an ad that raises CTR increase with the number of ads Google can choose from. The downside is that this destroys the A/B test that advertisers should be running to find the best copy. Google’s algorithm is very fast to pick a winner, much faster than any professional recommendation we have seen on split testing. There is no doubt that at a Big Data level this is a winning process for Google, but at the small business level that is far less certain. We believe our goal should be the best copy, not just the best CTR. Good ad copy is designed to get clicks from the right people, not just the most people. Remember: the most people is good for Google; the right people is good for the advertiser.

Rotation is an area where Google and advertisers have had conflict in the past. There was a time when Google went beyond recommending optimized rotation and removed the option to rotate ads evenly, forcing advertisers to do it their way. The pushback from advertisers was so strong that Google backed off, put the option back, and made it a best practice not to use it. Not their finest moment, but it shows how much Google values controlling rotation. We commonly run our ads with forced rotation because we are running split tests and want to make the call on which ad is the winner ourselves. Google calls a winner much faster than we would recommend. We normally want to see a confidence level over 80% on an A/B test before we decide which ad is the winner.
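The post does not say which statistical test sits behind the 80% confidence threshold. A common way to compare the CTRs of two ads is a pooled two-proportion z-test, so the sketch below assumes that test and reports a two-sided confidence (1 minus the p-value). The function names and the click and impression counts are hypothetical, chosen only for illustration.

```python
import math


def ab_confidence(clicks_a: int, impr_a: int, clicks_b: int, impr_b: int) -> float:
    """Two-sided confidence (1 - p-value) that ads A and B have different CTRs.

    Assumption: a pooled two-proportion z-test (normal approximation),
    which is one common choice, not necessarily the one the author uses.
    """
    ctr_a = clicks_a / impr_a
    ctr_b = clicks_b / impr_b
    # Pooled CTR under the null hypothesis that both ads perform the same.
    pooled = (clicks_a + clicks_b) / (impr_a + impr_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impr_a + 1 / impr_b))
    if se == 0:
        return 0.0  # no variation at all, so no evidence of a difference
    z = abs(ctr_a - ctr_b) / se
    # Two-sided p-value: 2 * (1 - Phi(z)) simplifies to erfc(z / sqrt(2)).
    p_value = math.erfc(z / math.sqrt(2))
    return 1 - p_value


def pick_winner(clicks_a, impr_a, clicks_b, impr_b, threshold=0.80):
    """Return "A" or "B" once confidence clears the threshold, else None."""
    if ab_confidence(clicks_a, impr_a, clicks_b, impr_b) < threshold:
        return None  # too early to call; keep rotating evenly
    return "A" if clicks_a / impr_a > clicks_b / impr_b else "B"


# Hypothetical: 5% vs 6% CTR on 1,000 impressions each is not yet a call,
# while the same CTRs on 10,000 impressions each clear 80% comfortably.
print(pick_winner(50, 1000, 60, 1000))
print(pick_winner(500, 10000, 600, 10000))
```

The same one-point CTR gap goes from "too early" to a clear winner purely because of sample size, which is why we let an even-rotation test run rather than accepting an early call.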

Google believes that CTR is the way to measure performance, though they will optimize for conversions if you have enough data. The problem is that they are not considering all the other variables that affect your results. We propose that AdWords is a conduit for demand, not a demand generator, so the results are subject to outside influences. This is why it takes time to test. Demand in the mind of the searcher starts the process, and nothing in AdWords Search influences that demand. The best example of this was a snow removal client who tried everything to get his conversions flowing, but nothing worked until a huge snowstorm hit. Trust me when I tell you there is no “Start A Snow Storm” feature in AdWords.

Testing is a complex process, and it is never as simple as a single number. Test results are affected by many variables, and we have seen many conflicting results: ad “A” wins the first test but loses the second. We often run margin of error tests with exactly the same ads at the same time, and we still get different results. In these tests we have found that a CTR range of plus or minus 10% is common, so when someone tells me that something is 5% better, I want to see how much data is behind the claim. If your normal CTR is 5%, then the real CTR is somewhere between 4.5% and 5.5%, and anything in that range is too close to call.
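To make the arithmetic concrete, here is a small sketch that treats the ±10% seen in our margin of error tests as a relative band around the baseline CTR. It assumes Google’s claimed 5% is a relative lift (5% of a 5% CTR), which is an interpretation rather than something Google states here; the function names are hypothetical.

```python
from typing import Tuple


def noise_band(baseline_ctr: float, noise: float = 0.10) -> Tuple[float, float]:
    """CTR range indistinguishable from the baseline, given relative noise."""
    return baseline_ctr * (1 - noise), baseline_ctr * (1 + noise)


def too_close_to_call(baseline_ctr: float, observed_ctr: float, noise: float = 0.10) -> bool:
    """True if the observed CTR falls inside the baseline's noise band."""
    low, high = noise_band(baseline_ctr, noise)
    return low <= observed_ctr <= high


baseline = 0.05                 # a normal CTR of 5%
claimed = baseline * 1.05       # assumed 5% relative lift, i.e. 5.25% CTR
print(noise_band(baseline))     # roughly (0.045, 0.055), i.e. 4.5% to 5.5%
print(too_close_to_call(baseline, claimed))
```

Under these assumptions a 5% lift moves a 5% CTR to only 5.25%, well inside the 4.5% to 5.5% band, so on its own it is indistinguishable from the noise we see between identical ads.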

Does this mean that Google’s approach is wrong? NO! It just means that there can be different right answers. Google’s method has some strengths, and for some accounts it could be the best answer. The key is to understand the strengths and weaknesses of each option and then apply the process that best fits your specific situation. In a very broad sense, Google’s Best Practices work best for large, high-volume accounts but are not always a great fit for small businesses with highly constrained budgets.

Smart professionals with good intent and the same data can come to very different conclusions. This is what makes marketing such an exciting occupation.